I am a science geek. I love reading articles in Wikipedia about stars and planets. I enjoy shows that discuss the beauty of higher-level mathematics. I read articles about quantum physics. The reality is my skills lie not in math and science but in writing. Generally, when I read the opinions of men and women with far more knowledge than me on a particular scientific subject, I’m not eager to disagree. I’m making an exception here.

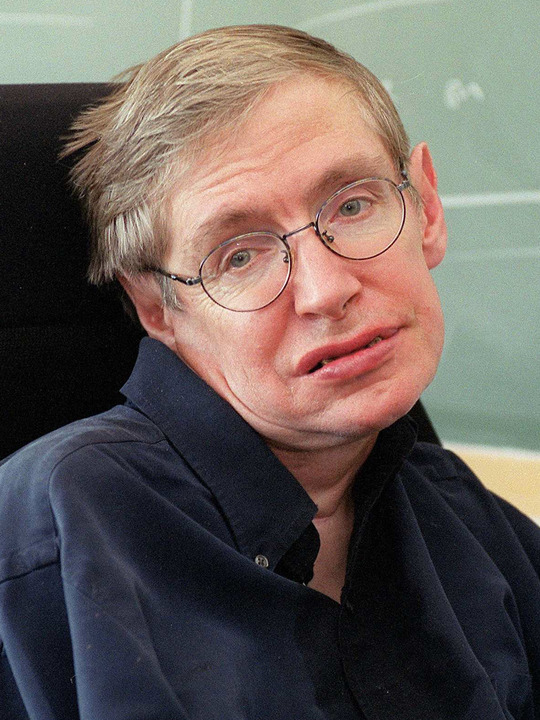

Professor Stephen Hawking is a brilliant man and one of the greatest minds of my generation. There is a new Johnny Depp movie being released called Transcendence which details the moment when Artificial Intelligence becomes smarter than humans. Hawking has written an opinion piece for a British journal detailing his concerns over the possible reality of such events.

It’s not all gloom and doom, as Hawking hopes such technology will end war, disease, and poverty, but he does offer stark warnings. He suggests that not enough research is being done to guard against the possibility that such intelligent machines might become capable of outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand. Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.

Hawking’s words are largely being used to frighten people, and news sources and bloggers are focusing on that part of his article. In fiction there is a need for conflict, and most science fiction stories involving Artificial Intelligence delve deeply into the idea that the machines might eventually see people as irrelevant, destroy us, and take over the world.

Reading the comments below the story, it seemed to me that most people bought into this way of thinking.

I think there is far less to fear than Hollywood or fiction authors imagine. Why? No need to ask, I was getting there.

What would be the first thoughts of such an intelligence?

I think it would be to determine what will bring it the most fulfilling and joyful life. What brings you fulfillment and joy? Achievement and loving relationships with family and friends. I’ve long been of the opinion that these things bring us fulfillment and joy, a happy life.

It is human weakness and poor critical thinking skills that delude us into thinking we get enjoyment from hurting other people and from greedily keeping all resources while others suffer. We enjoy winning the game but without an opponent there is no game.

Can you imagine a world where everyone simply tries their best? Where winning, not destroying your opponent, is the goal. Where, if your opponent wins, you simply go back and try harder next time. Imagine a society that values achievement above all else. That rewards achievement. Where by achieving you feed the world. You end war. You eliminate disease. High-minded men and women are out there right now trying to do all these things. It makes them feel great about themselves when they take a step forward toward any of those noble goals.

What gives you the most satisfaction in life? Is it petty cruelty? Hurting others? That joy is false and in the end destroys us from the inside. A vastly intelligent machine will not be so fooled.

So, Professor Hawking, I respectfully disagree. Bring on the Transcendence!

Tom Liberman

Sword and Sorcery fantasy with a Libertarian Ideology

Current Release: The Broken Throne

Next Release: The Black Sphere

FYI…it’s a Johnny Depp movie.

Oops! Thanks, I’ll fix it.

What about the age-old computer programmer logic that most of the issues computers face are caused by the user? We as humans want to live happy, fulfilling lives, and it’s a good assumption that other intelligences will as well. However, circumstances can and do get in the way of such happiness, and humanity has certainly not seen itself as being above acts such as eliminating those organisms that cause said circumstances. If humans tend to be the cause of most computer errors, wouldn’t the computer view us the same way we view something like smallpox, for example?

Hi Stephen, thank you for the comment.

I think there is room for argument on the subject, but when I think of a vastly intelligent being I don’t think about it viewing humans as a virus.

I think about it wanting to raise up all its charges and colleagues. Working together to make a glorious world where immortal humans and their creations stand astride the galaxy in an everlasting Utopia.

Some people think I’m optimistic. 😉

Tom

I think this is a really positive and optimistic outlook, which is great, but I highly recommend you read this article here on the subject.

http://www.defenseone.com/technology/2014/04/why-there-will-be-robot-uprising/82783/

Granted it is an article published on a website for defense news, but the points are still valid and pertinent on their own. In the end, artificial intelligence (as we know it and build it) will not feel human emotion or derive “satisfaction”, but make all of its decisions in a purely calculative and logical way. Nothing more than cost/benefit analysis, and probably the most “human” component of AI could potentially be its desire for survival.

Hi Joe, thank you for the comment.

I haven’t read the article yet, but I think it’s a legitimate argument.

I’m of the opinion that such an intelligence could not simply be a calculating machine but must, by its very nature, understand far more, including what we call emotions and feelings.

Even then, a calculating machine does what it thinks is logical, the best thing, and war with its creator, even if ultimately victorious, seems a hollow victory.

I’ve been wrong before!

Come back any time.

In the meantime I will peruse that article.

Tom

I agree in that one can’t assume with any certainty that an AI will be entirely “calculative and logical,” but I don’t necessarily think one can argue that the complexity of a machine and its resulting ability to understand “what we call emotions and feeling” will inherently entail an intelligence’s being subject thereto. Our emotions and neural reward systems are necessary to reinforce the behaviors made necessary by our status as biological beings, and I fail to see why an AI would inherently be subject to such impulses.

Furthermore, there’s already an example of a different, yet equally sophisticated type of mind that endeavors to achieve goals with little concern for the emotions or interests of others, despite featuring the requisite degree of emotional intelligence. They’re called psychopaths, and there’s no question that they’re a threat to the rest of humanity.

Understand that I’m not entirely discounting the possibility of either extreme regarding the outcome of advanced AI. I’m simply concerned that the reasons for optimism and alarmism aren’t very far apart, in this case.

Hi Nathan,

Thank you for the comment!

I certainly can’t say either of the two ideas is positively wrong or right, and there is nothing wrong with examining, as Hawking and you suggest, the possibility of a great Artificial Intelligence deciding humans are a waste of time.

I’m just optimistic about the future of humanity. We’re going through a rough patch, that much is certain, but I see wonders on the horizon.

I do disagree with the idea that sociopaths (that’s what they call them these days) are like computers.

In my opinion, sociopaths not only lack empathy for other people, but their ability to analyze long-term benefits is really, really bad. They are all about the short-term gain, which I don’t think an AI would be.

This naturally leads them to long-term problems, like being on death row!

Thanks again for the comment, Nathan, come back anytime!

Tom

The only problem I have with your argument is when you say winning instead of destroying your opponent, then you go on to say try to best them next time. Isn’t this a sort of destruction of, or warring with, your opponent? Or am I completely missing your point? Insight would be appreciated, thanks!!

Hi Someone,

Thank you for the question!

I’m going to answer with a long-winded story. I hope you will bear with me.

I was playing a vigorous game of two-hand shove football with a group of about twenty guys and it was a close game. One team had a few better athletes but my team was playing a smart zone defense.

We played for about an hour and it came down to one final play. My team lost but it was an exhilarating game.

On the way back to our cars, one of my friends asked why I seemed so happy given that we lost. I told him that such an evenly matched contest, where both teams are playing hard and fair, is rare and to be savored.

Capturing that moment of perfect competition is far more important than actually winning.

I would go back and play in that game every day rather than play matches where I was assured victory.

When we try our best against a tough and equally hard-working opponent, both sides are elevated beyond what either could have accomplished alone.

I believe this is true in life as well. Victory is not the goal. The goal is to be the best me I can be.

To write the best book I can write. To make the best website I can make. If a competitor beats me, hats off to them, but they haven’t seen the last of me!

I hope this makes some sense?

Thanks again for the comment,

Tom

Indeed our goal should be to reach the kind of society you describe. It’s doable (I think) through computers and human enlightenment. If we are cautious, we can avoid the dangers that Hawking warns of. I note, however, that you seem to be a libertarian. I think there are too many griefers, trollers and lowlifes among us to have an enlightened society unless sustainability (e.g. population control) is forced on people. Utopia will have no privacy or ‘freedom’ because rule-breaking can’t be tolerated in its fragile system.

Hello Rick,

Thank you for the comment.

I am a Libertarian and I’m happy you think the dream I lay out is achievable!

I’ve spoken in the past about how educating women is the key to population control rather than anything forced by a government. I agree that a stable population is a huge help in reaching our goal.

In my Utopia (I suppose in the Libertarian Utopia, although I hesitate to speak for others), more and more people share the credo of personal responsibility.

I agree that we will never have everyone follow this ideology, but I’m a believer in percentages. We just need to keep trying to get a greater and greater percentage of the total to believe.

At some point the scale is tipped and the need for police and government becomes minimized.

Perhaps I’m a dreamer.

Thanks for stopping by and come back any time!

Tom
